As the Continuous Delivery is spreading, more and more CI servers are working on daily builds, tests and releases. With the growth of the companies' codebase we are repeating almost the same steps with slight changes to implement CI/CD coverage for the new modules and projects, but the maintenance or update of these steps is getting harder. In a monolithic environment the growth is not so remarkable, but as we are moving toward the microservice paradigm with containerization, the maintenance effort of these configurations could jump quickly.

The majority of the servers I saw was run the same sequence of checkout, build, package, deploy, tests steps and finally marked artifact as releasable or released it into the production environment.

Also all of these servers were guarded by intensively and only some chosen ones were able to change configs or could consider to upgrade to a more recent version with shaky hands and unpredictable compatibility issues. We could agree on the high importance of these servers and their unique role, but how could we mitigate the risk and dependency on these servers? How could we repeat pipeline assembling steps in a reliable way and make predictable the outcome?

Purpose of this document

This is the first part of the series of tutorials about Jenkins scripting to achieve a fully automated, decentralized and replaceable delivery pipeline architecture with better flexibility than the monolithic, centralized Jenkins setup. In the series I’m talking about the scripting basics (here), some intermediate steps (xml configuration, modularization, common tasks, DslFactory, etc.) and the extension of the plugin function with own library routines. In this part I’m explaining only the basics of job and view creation, the syntax and a basic pipeline generation with some recommendations.

Monolithic Jenkins challenges

The classical server setup is built around a central Jenkins server, supported by generic and slightly specialized Slaves where the carefully configured jobs are assigned to these agents based on the requested behavior and guarded by few experts to keep all stuff up and running and intact. The update of these servers and their plugins are quite rare event, because the compatibility is a challenge (the search keyword: “jenkins upgrade issues” has 65 million results on google at the moment). So the majority of Jenkins projects are running on outdated Jenkins versions just to keep the CD pipeline up and running, because the continuity of the operation has high importance.

Another interesting point is the disallowance of experimental moves on the central server. If you have a pet server instead of a cattle, (see: http://bit.ly/1KItvy9) the testing of new plugins and functionalities is something that makes you get totally scared and worry about the stability of your precious, fine polished build environment.

Breaking out of the pet world

The limitations mentioned before are the results of Conway’s law and blocking the evolution of your organization. You need to break through it to enable higher productivity and faster go to market with your freshly implemented features. The solution is simple and existing since decades when you have a problem that should be solved dynamically, depending on several consideration factors.

You need a programming language...

You need it to code down your delivery pipeline once and run it every time to regenerate it when you need.

You have developers working your product and writing code every day. What could be more natural then a scripted delivery pipeline as a solution for your customization needs. Comparing with some convention over configuration based server where you are blocked by the limitations of the platform here you have the largest freedom to tailor the system to your needs.

A scripted version of delivery pipeline could guarantee you will get the same results every time when you rerun your script in repeatable and reliable way. Also providing the versioning of your pipelines and the possibility of fast rollback without initiation a long recovery process of the server itself. The common parts could be shared between a team to build up a general purpose, but fully supportive build platform based on their experience and representing the DRY principle.

This is exactly the Jenkin DSL plugin's purpose, to give you the highest freedom of the scripting without the limitations of version lock-ins, plugin feature limitation and you could leave behind the pet like Jenkins server’s era.

With efficient scripting you could earn:

- Jenkins version independence (upgrade is not a problem anymore)

- Flexibility of scripting (All Groovy features are available)

- Automation of repeating tasks. Same problems can be solved on same way

- Standardization of delivery pipelines, based on a common framework

- Historization of the pipeline. Can be stored in your code repository

- You could break out from the grip of the monolith and setup a new server anytime

- Change in the implementation can be applied on all servers and jobs quickly

Simple example

As a starting point we need a simple job definition to see the syntax and understand the basics of Jenkins scripting. First we need to prepare Jenkins to run our scripts, then create script running job manually (bahh!) finally we should run the job and verify the results.

I assume you are using a non-production Jenkins server :)

Prepare Jenkins

To setup your first build config you need to make Jenkins able to run your scripts. In the example I'm using Github as Git repository and Maven as build tool. I assume you have Java Development Kit installed on your machine. Please setup Java and Maven on your Jenkins first.

When these prerequisites are done we need some customization to make our server ready to run the seed job.

As a first step please install the following plugins:

- Jenkins Job DSL plugin. The plugin has some dependencies, but after the server restart you could create your very first job script!

- Git plugin to access Github repositories

Create a seed job

To generate other jobs we need a starting point as a 'seed' or 'boostrap' job. This one is created now manually, but I'll show an automation option in the later part of the series. Let's see the steps:

| Figure | Step |

|---|---|

|

| Click on New Item |

|

| Choose Freestyle project and type 'seed' as job name |

|

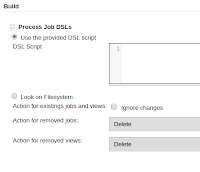

| Add a new build step to the new project: Process Job DSLs |

|

| Configure the step as you can see on the picture |

|

| Copy the Job creation script into to edit field and save |

Run and verify

Then save the job and run it. The console output is something like this:

Then you have a new job without any interaction with the New Item button:

If you properly configured your Jenkins and no firewall restrictions around, then you could click on the 'Demo build job' and see how it checks out the demo code and compiles with maven.

Congratulations! Your first, scripted job is created! Good job!

Explanation of the script

The first noticeable fact about your script is the format. This is groovy closure, that defines your job and it can mainly interpret the predefined and implemented entries. In the following articles I'll explain how could you extend the DSL commands with custom XML settings or library definitions.

- In this line we are defining the closure as job and specifying the name. The name is mandatory!

- Here's the definition start for version controller configuration

- We are using git.

- Defining the remote endpoint for git.

- Specifying the URL of the repository

- Closing the block

- We are operation only on the master branch at the moment

- To speed up the checkout process we are using shallow cloning.

- closing block

- the definition of the checkout part is done

- Start the definition of build steps block

- we are using maven to compile the code as demonstration

- ..

- ..

That's it for first. The DSL plugin during the build is taking your script and the closure entries are converted to XML configuration that could be interpreted by Jenkins. You could add more then one of these closure, so feel free to try it out! The outcome of the script run should look like this:

Main DSL building blocks

The DSL plugin manages three main closure blocks: Jobs, Views and Folders.

The Jobs are well known elements of Jenkins, as building blocks of our delivery pipelines. They are responsible for the checkout/build/release/etc. processes. In a job we could define sophisticated logic for the source code repository access, pre build steps for preparations, multiple build steps and some post activities to finish the job and route the flow to the next step(s).

The View closure could define different viewing styles for our jobs and group them together based on regex patterns or downstream dependencies. In the views we could define a list of grouped jobs based on a criteria, but not separating these jobs from the global view. If you want real separation, consider using Folders.

|

| Build pipeline view example |

|

| Delivery pipeline view example |

Defining jobs

With the jobs closure you could imitate the Jenkins job creation functionality and you are able to define all built in job types and some additional ones:

The standard job(name) definition is an alias for the freeStyleJob, so you could shorten it ;) This list is just showing the current status of possibilities (13/06/2015), so please check the Job command documentation for the recent status.

Let's start the job definition with some version controller related hints:

Let's start the job definition with some version controller related hints:

Triggering

Triggering is about to define a condition when your job should get started. You could define multiple entries with different types, but the most often used ones are the poll and cron.

Polling: If you are using commit hooks on your server, you don't need to poll the repository regularly, otherwise the polling syntax could help. Polling uses classical cron syntax, but it has a list of predefined aliases to make it more readable:

Cron: For non-SCM based activities like cleanup, regular checks or other regular activities you could schedule the periodical job run. This entry is independent from your SCM definition!

URL: This is an interesting option to poll a URL run some jobs if changed

Build steps

In the build steps you have a big range of opportunities to run I'm just covering the maven/shell and additional DSL scripting.

Maven: Jenkins is delivering first class maven support to you either on job or build step level. You could execute simple goals or fully configure maven for your needs:

Shell script: If you need some shell magic in your build, there's no problem. You could specify OS level scripting, but beware of the host operating system!

DSL script: As a l'art pour l'art action you could define additional DSL script running jobs to represent a complex build/delivery mechanism. You are not locked in into one seed/dsl script. Groovy has a multiline string capability to enable string definition in more readable format if you put your string between """ characters.

Job hiearchy

Jenkins jobs could run as standalone units or as part of a flow. Every pipeline could be a graph (usually a special one: tree) and we could define this structure on DSL level too. On job could have multiple children (downstream job) to run parallel tasks and we could specify the entry condition to move from one job to another

- SUCCESS

- UNSTABLE

- UNSTABLE_OR_BETTER

- UNSTABLE_OR_WORSE

- FAILED

The definition of downstream items could be static of programmatic. The v1.33 of the plugin is now supporting upstreams where you could specify prerequisite runs for your job, but I haven't tried yet. If you have any experiences with it please let me know. This section will be updated when I evaluated it.

Workspaces

Working on shared codebase (at least within your team) needs to run your pipelines safely separated from changes committed during the run. You couldn't accept any changes when the pipeline started and should be treated as new pipeline start. If you run your Jenkins in distributed environment with slaves (VM or Docker based ones) you should deliver all required artifacts before you start the next job. Thats why Clone Workspace SCM plugin designed for Jenkins, to move filtered sets of files between delivery stages. Not only the originally cloned source can be move, but all created artifacts and reports could be reused in a later phase of pipeline. A typical case for me when I'm moving the source code to the build related steps, but the Docker image creation only needs the generated artifact, so I'm only moving the binaries to that job. With the plugin you could define the include/exclude patterns of the files, cloning criteria, archiving mode and other properties. Let's see them by their position number:

- Include pattern in ant style

- Exclude patterns

- Cloning criteria: Any - the recent completed build, Not failed - recent successful/unstable build, * or Successful - recent stable build

- Archiving mode: TAR or ZIP

- If true, don't use the Ant default file glob excludes. I'm always setting it to true, because I have no idea what does it mean :)

- cloneWorkspaceClosure - I never had to use to specify some very peculiar settings, so this is always null

The most generic example:

Defining views

If you generate your jobs by scripts the number of these jobs could grow quickly and a good grouping could help us - and others - to get lost between dozens or hundreds of autogenerated jobs. Fortunately the recent version is supporting multiple view out of box, no any XML hacking necessary to create some nice looking job lists. Common point between these views it the possibility of regular expression based listing and a good naming standard for your jobs could play nicely with this feature. An example:

sprintf('%s-%s-%s-%02d-build, projectName, componentName, branchName, stepNumber)

I'm just explaining three types of views, because a more detailed documentation is available for all view types.

List view

The most common view in Jenkins. Personally I prefer to exclude the weather column from the definition. You could specify jobs one-by-one or use a regular expression to group them based their pattern.

Here's the view definiton for this screenshot:

Here's the view definiton for this screenshot:

Build pipeline view

The build pipeline is working on the downstream definition of your jobs to put together a hierarchy (could be a tree too). You just need to specify the order in the publisher part and it generates the structure and their statucs for you:

The most simple build pipeline code

Delivery pipeline view

The delivery pipeline is a bit complex structure, because you could specify stages and tasks within stages. It has it's own deliveryPipelineConfiguration entry in the job closure to describe the job's position within the pipeline (see the demo code)

Here's the simplest pipeline definition for the screenshot above:

Summary

Congratulations! You arrived to the end of part one you know all the foundations of the DSL scripting. I hope you followed some links I specified to digg deeper into the job/view definitions and feel free to ask me if you have any questions.

Now you are able to define simple flows in Jenkins, customize some views to create nice looking dashboards and make it completely autogenerated by a script.

In the next part I'm going to talk about seed/bootstrap jobs, pros and cons of this approach and I put together a small pipeline as a blueprint.

Thank you for your time and all feedbacks are welcome! :)

Thank you for your time and all feedbacks are welcome! :)

Interesting approach. One thing is not clear though.

ReplyDeleteHow do you cope with the huge amount of jobs when the number of project start to grow? I've got 20-30 projects running at the same time. Each one is at least 7-8 stages. Which means ~200 Jenkins jobs and a very long groovy script to generate all of them.

Most of them are exactly the same, with a different name (i.e. [proj_name]-[phase_name]) and git repository URL. Each projects have a similar definition: Proj1-step1 -> Proj1-step2 -> Proj1-step3, Proj2-step1 -> Proj2-step2 -> Proj2-step3 where step1, step2 and step3 are almost exactly the same, except for the name and the git source repository and the name of the next project down the chain.

Doesn't this goes agains the DRY principle?

I've explored two solutions:

1. Use Job DSL to define parametrised jobs that describe a step and build flow to run and orchestrate the steps.

2. Resort to a groovy library that can encapsulate the definition of the steps so that on each project we can call something like: new MyComplexListOfSteps(params) as proposed in https://github.com/sheehan/job-dsl-gradle-example.

Both solution have pro and cons and I'm still unable to address teh following issues:

- If I organise the steps inside folders per project, there's no plugin that will read the state of a folder in a wall

friendly way to be displayed on a screen in the middle of the office

- With time the build number of the various steps will grow out of sync and will make it harder to track the overall flow

of a single build

It would be nice to get your opinion on these issues if you have some time to comment.

Over all great post! I'm looking forward to the follow ups!

Dear Fabio,

DeleteI absolutely agree with you and the later parts I'm showing how I handle huge amount of projects, but this is only about the basics.

Let's see what I'm doing to handle the large number of projects

- Multiple Jenkins instances, dedicated to specific area. This gives the freedom to the teams, to not depend on others by plugins/versions and development cycle. With more Jenkins instances no need to see hundreds of jobs in one place. I'm not recommending to use a central Jenkins to host all things.

- We have a complex pipeline framework to generate pipeline for our projects, all repeated steps are extracted to an independent Step class (checkout, build, etc...) and linked together by patterns. For this you need a DslFactory instance that will be explained in the Part 3 (spoiler alert! :) ). The pipeline is hosted in a shared repo and their checked out together with the dedicated boostrap job definition. (In Part 4 I'm telling the details of library creation for DSL plugin)

- To use building resources efficiently the Jenkins agents are dockerized and using the same docker clusters. We have totally separated build environments, but we could save costs on shared resources (will be explained Part 5 or 6)

- Our Jenkins instances are disposable and puppetized/dockerized (in progress). You are allowed to change anything on your instance to try new ideas then you could issue a pull request to make it available for everyone. Thanks to the Jenkins automation in this year we are upgraded our Jenkins servers safely every time, when a new version released (and the plugins too.).

A puppetized Jenkins can be generated within 8 mins, a dockerized takes a minute with image pulling. The already dockerized Jenkins servers can be run on the build server hosts or you could start them locally on your machine if you need. You can get a full pipeline even on your machine.

I'm still working on the Part 3 I hope you'll like it :)

This comment has been removed by the author.

ReplyDeleteOh Wow. I didn't think you were writing a whole series of posts on Jenkins. I'm looking forward to the end result.

ReplyDeleteI've already learnt of DslFactory and it seems a good way to abstract steps. But if you start having a pipeline that relies on a lot of steps, where would you store the different artefacts? And where would you publish test results?

In a standard Jenkins job you get that nice overall view of the build with code coverage graphs and links to artefacts for the build. But on a pipeline there's no such page because there's no job coordinator. If on one side implementing pipelines have the advantage of making the process more clear, it makes it harder to display build trends and test results. Or iss there something I'm missing?

Also, I've just discovered that Jenkins has announced first-class support for Workflows. Which is a different way of addressing the same problem. This adds more confusion to the mix!

It would be really nice to discuss these things further. Are you contactable over any chat system (even twitter)? (or if you live in London I can just buy you a drink :) )

The final artefacts are stored in Artifactory (even the generated docker images) and I'm using workspace cloning to move them between the steps. The code quality metrics are hosted on Sonar. We are distributing the roles and dependency between dedicated tools, not relying on one central stuff. It makes the overview bit harder, but more flexible as architecture and loose coupledness.

DeleteI'm on twitter as lexandro or lexandro2000@ google's thingy... .com.

I also noticed their workflow stuff and added to my TODO list to get familiar with, because their Docker support looks more promising.

If you have time, you could look on https://github.com/flosell/lambdacd as server independent solution, but I have no idea, how the specific plugins are supported, just saved to my bookmarks :)

So the Jenkins DSL series planned to be 4 parts, then I'll write about the Git forking workflow (https://www.atlassian.com/git/tutorials/comparing-workflows/forking-workflow/) and how to support it on Jenkins side (especially the pull request verification for daily releasing).

After then I'll write about docker from dev and devops perspective, the challenges of image versioning, sharing our experiences at my company, then I explain how are we completely dockerized our Jenkins slaves. We will see how it ends up :)